Table of Contents

Long Context Trends in the Enterprise: 7 Common Use Cases From the Field

In 50 meetings with enterprise clients over the last three months alone, the use cases they describe wanting to build are what we in the field call long context use cases: Tasks that are data-heavy and require a model to be able to ingest a large amount of information in order to produce an accurate and thorough response.

This market trend is confirmed by the increasingly longer context windows we’re seeing with each subsequent language model release, with some models starting to push into the millions of tokens—a huge leap from the 4K or 8K token counts of barely two years ago.

A big demand for this rise in context window length comes from the business world, where long context is instrumental in scaling applications to support enterprise workflows. It’s the difference between a developer feeding a model the file they are working on versus their entire organizational codebase. The scale—and the resulting intelligence—is incomparable.

However, with context windows always listed in tokens, the practical difference between a 32K and 256K model, for example, is not always clear. Companies want to know: How many financial reports does that add up to? How many interactions in a customer support chat? And, of course, what tangible value does the ability to fit so much context have on model output?

In this blog, I want to spend some time explaining the value of long context, before sharing the top 7 long context use cases I see across the financial services, health care and life sciences industries, as well as cross-industry customer support use cases—plus a bonus prompt example and cost and latency breakdown for an earnings call summarization use case. In each use case, I’ll share how long context solved a core business challenge, bridging the journey from ideation to production.

Context window 101

Context window is the total input and output data that a model can ingest at any given moment. It’s a moving target, though, so as soon as the context window fills up, the model will start losing access to the data that came at the start.

People often use the metaphor of being in a (very) long conversation, and starting to get hazy about how or where you started off once you’re an hour in.

Because of the finite nature of context windows, the enterprises I speak with care a lot about having a model whose long context can adequately support the information they need the model to ingest and process. In particular, long context offers enterprises greater customization and better quality output, while optimizing for cost.

Model output customized to your organization

During pre-training, an LLM is exposed to vast amounts of general data that instill it with some basic context and also help it develop strong reasoning skills. Yet to successfully answer questions that are highly particular to a company and their operations, the model will need a bit more information: your unique organizational nomenclature, brand guidelines, customer service protocol, refund policies, and more.

This is information you don’t want the model reasoning from its training data, and the model needs the ‘room’ in its context window to fit this information, along with the prompt.

More accurate and contextualized output

Long context allows a model to apply good research skills. Just as someone who has read just one article about a topic will not give answers that are as satisfactory and in-depth as someone who has read a whole book on the same topic, a model with a smaller window will always be limited in the number of documents it can process to generate an answer. While workarounds, such as summarization mechanisms, exist for breaking long documents into shorter chunks, these strategies are less reliable than models trained to handle long context from the outset.

For question answering or summarization tasks on lengthy documents or a large number of documents, a model that can take in more context will have higher success producing an answer that is both accurate and also makes sense in its broader context.

Optimized costs

As many enterprises with RAG systems know, the costs of these solutions can quickly balloon when reviewing and synthesizing large quantities of information. Limiting the amount of snippets retrieved could be one workaround—but it risks missing out on critical information or context. Not to mention, for industries who rely on documents that regularly reach 100+ pages, such as finance, quickly filling up a context window is inevitable.

For those cases, using a cost-effective, high-performing long context model alongside your RAG system is essential for keeping costs in check. Not only is Jamba-Instruct one of the few models on the market to maintain impressive performance on long context use tasks across its entire 256K context window, it also offers this massive context window at one of the best price points on the market, making it an attractive option for companies who want both a reliable and cost-effective RAG solution.

Top long context tasks for the enterprise

A long context model, like AI21 Labs’ Jamba-Instruct, which has an effective context window of 256K, can help enterprises with the following:

- Multi-document analysis: Summarize or compare across multiple documents at once to identify key points and insights.

- Multi-document question answering: Query multiple documents, records, or policies in a database.

- Organizational search assistant: Improve the retrieval stage of a RAG system for organizational data, resulting in higher quality answers.

- Risk mitigation: Inject a prompt with complex and detailed instructions to guide its response, especially important in highly-regulated industries like finance and healthcare.

In the following paragraphs, I’ll walk through some of these tasks by industry to show how a long context model, like AI21 Labs’ Jamba-Instruct model—with a 256K context window—is necessary for high quality and reliable output.

Finance

Use case: Extracting business insights from earnings calls

As part of gathering and synthesizing intelligence about a given company’s performance, investment analysts will comb through their quarterly earnings calls, using the updates shared there to make recommendations to portfolio or investment managers. The transcripts of these calls can be around 19 pages—equivalent to around 6K tokens.

What 256K means: A 256K context window can put the data of 32 earnings call transcripts—8 years’ worth of financial reporting—at your fingertips.

The value: Instead of manually pouring over these documents, or feeding them year by year to a model, the model processes 8 years of financial data as one unit, improving the comprehensiveness of its analysis and increasing the chances it can spot important trends and patterns that emerge over time.

Let’s take a look at a sample prompt and output, generated based on the transcripts of seven publicly available earnings calls from Nike:

Prompt

Task: what are the financial results most talked about between the CEO and the analysts in these calls. Explain what the analysts are trying to understand. Be specific. include 4 such points, and the details from the calls referring to them.

Output

- Revenue and earnings growth: Analysts are interested in understanding the company’s revenue and earnings growth in each quarter. For example, in Q3 and Q4 2023, John Donahoe emphasized that the company exceeded its plan and the financial numbers are in line with expectations, showing solid growth.

- Inventory levels: The financial performance of the company is heavily influenced by the inventory levels. Analysts keenly follow the inventory details, as they can reflect on the company’s market position and the efficiency in meeting consumer demand. For instance, in Q2 2024, the company indicated an improved inventory position and progress in managing inventory levels, reducing double digits from the prior quarter.

- Gross margin performance: Gross margins are indicative of a company’s profitability, reflecting how much it earns from sales after accounting for the cost of goods sold. Analysts are interested in tracking the shifts in gross margins over time as they could signal changes in the business’s underlying operational health. In Q2 and Q3 2024, Matt Friend highlighted the company’s successful achievement of gross margin expansion despite a highly promotional marketplace.

- Outlook and guidance for future quarters: The company’s financial outlook for future periods is a focus for the analysts. They try to understand the company’s expected performance, considering factors such as macroeconomic conditions, promotional activities, and changes in inventory levels. In Q3 2023, John Donahoe discussed their guidance for fiscal ’23, regarding expectations of reported revenue growth in the high single digits and a decline in gross margin.

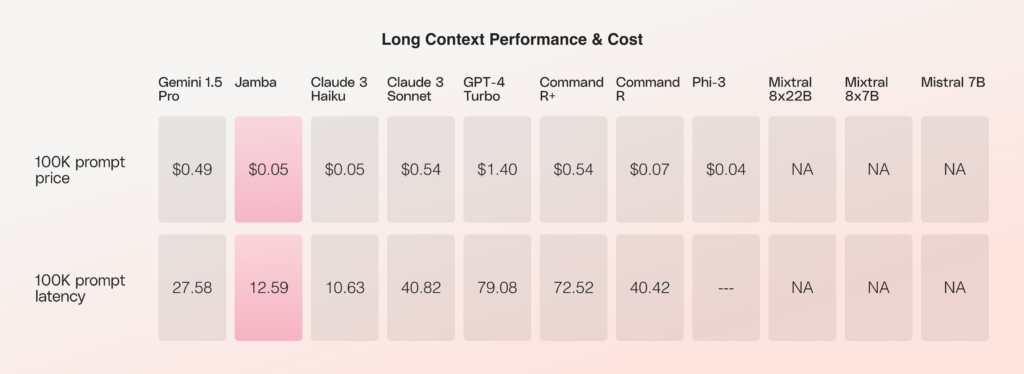

Not only can Jamba-Instruct handle a 100K prompt like this one, but it does so at a far more optimal price and latency than competitors, as seen in the chart below:

Use case: Summarizing financial documents

Financial analysts and investors, who are tasked with keeping up with the financial health of various companies, and sharing insights, projections, and recommendations on the basis of that data, find themselves regularly combing through lengthy financial documents. For example, 10-K reports for multinational corporations can easily reach around 100 pages each—clocking around 32K tokens per report.

What 256K means: A 256K context window can fit 8 of these reports at once; that’s 8 years worth of a company’s publicly available financial history.

The value: Offer employees a valuable research assistant tool to extract insights and notice patterns across hundreds, or even thousands, of pages of dense documents, easing the tediousness of redundant tasks and providing a valuable check on human error.

Healthcare and life sciences

Use case: Generating clinical trial materials

Every clinical trial a pharmaceutical company conducts has an accompanying evaluation brochure, which contains details all previous studies related to the drug or treatment under investigation, as well as the planned experiment; after the trial, it is updated to reflect the new findings, and is generally audited once a year for compliance to ensure all information is up to date. As these brochures can easily span 100 or 200 pages, and are written by and for clinicians, their length and language is not easily comprehensible by trial participants who wish to understand more about the trial they’re taking part in.

What 256K means: A 256K context window can hold the data of one 200-page IB or several shorter IBs.

The value: A long context model can instantaneously synthesize the main, need-to-know points in the entire IB and convey them in jargon-free language that patients can understand, allowing researchers to quickly and reliably create patient-facing materials that support with trial recruitment. Furthermore, a long context model could also be used to incorporate the trial’s findings into the pre-existing IB, according to the standardized format.

Use case: Summarizing clinical trial interviews

Interviews with trial participants are one of the most important sources of research data in a clinical trial. Often lasting around one hour, these interviews must then be carefully reviewed by researchers to identify the reported impact of the treatment, as well as to report any adverse side effects to the proper regulatory bodies, such as the FDA. A transcript from a one hour interview can reach around 10K tokens.

What 256K means: A 256K context window can fit around 25 one-hour interviews.

The value: In addition to reaching publication more quickly by cutting down the need to manually review hundreds of pages of interview transcripts for every trial, the ability to conduct analysis across an entire cohort of participant interviews can increase the ability of researchers to spot patterns that might otherwise go undetected.

Retail

Use case: Product description generator

In order to generate new product descriptions at scale, companies need to be able to quickly transform raw lists of product details into brand-aligned product descriptions. The product details, several examples of the desired product description outcome, and guidelines on style, tone, length, and structure can amount to anywhere from 10-20K.

What 256K means: There’s room to input multiple products at once or requests several variations on product descriptions for A/B testing or persona customization purposes.

The value: With retailers able to scale and maintain their product inventory with ease, they can now focus on creative marketing optimizations.

Customer support

Use case: Customer-facing chatbot

To build a chatbot application that can reliably and accurately answer customer questions, a RAG (retrieval-augmented generation) solution is almost always needed. This mechanism allows the model to ground its answer in the information contained in your own help center articles or other form of an organizational knowledge base. For every question a customer asks the chatbot, the model will pull a defined range of relevant articles and generate its response accordingly. For example, if a model references ten articles, each around 5 pages, per question, that can quickly amount to 50 pages per question. For industries in which the documents referenced are even lengthier—such as insurance—the total number of pages a model needs to refer to per question goes up even higher.

What 256K means: With a 256K context window, a model can handle around 15 thorough question-answer exchanges with a customer—intaking the question, scanning relevant documents, and composing an answer that is in line with the company’s tone and style instructions.

The value: In addition to supplying customers with reliable information drawn directly from your organizational knowledge base, the model’s ability to handle such a long-ranging conversation also improves the customer experience. Unlike model’s with shorter context windows in which the window is maxed out after one question-answer exchange, a model with a long context window can build upon details shared earlier, creating a cohesive conversation and delivering the most relevant answers to the customer.

Use case: Internal agent assistant

Similarly to the customer-facing chatbot, RAG-powered internal chat applications can be valuable tools to customer support representatives or employees who need to access answers in real-time. With enterprise knowledge bases comprising thousands, or even millions, of documents, from product documentation to training manuals and from employee handbooks to meeting notes, manually searching by keyword, for example, often doesn’t cut it. We’ve all had that moment when we’re trying to find a certain piece of information, and suddenly it’s 20 minutes later and we’re still deciphering which file or article is the most current and relevant source of truth.

What 256K means: By pairing a model with a 256K context window with RAG, the model is able to scan up to 800 pages of organizational data.

The value: This tool helps employees avoid manually searching through several articles or files for the right answer, improving workflow processes across teams and, in the case of customer support, building brand loyalty.

These are some of the most frequently appearing use cases I hear about in my day to day, yet of course many more examples exist—and many new ones are appearing as companies understand the value of what long context LLMs can provide and race to implement.

If you have an idea of how one of these use cases can support your business, or want to explore another use case idea, let’s talk.