Enterprise AI: Beyond Experimentation, Toward Real Deployment

The Enterprise AI Reality Check

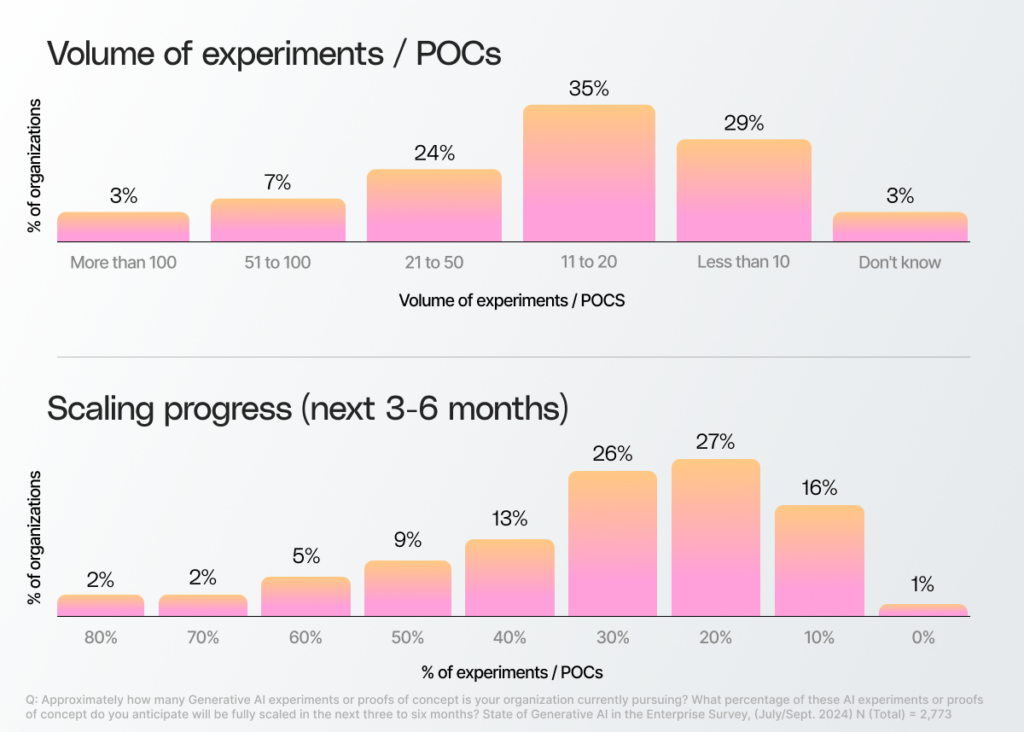

The AI industry just had another breakthrough moment. DeepSeek, a Chinese AI startup, built a model that outperforms OpenAI’s o1 on benchmarks while slashing costs. Impressive? Yes. Game-changing? That depends on how you define progress. The AI race remains focused on leaderboard performance: who hits the highest benchmark scores or the lowest per-token cost.

But enterprises do not work in controlled test environments. They need more than a model that is slightly better at reasoning on synthetic datasets. They need reliable AI systems they can trust: systems that work seamlessly with their workflows, integrate with existing tools, produce predictable outputs, and drive real business value. Yet most AI projects never reach that stage. Dataiku and Deloitte report that only 20-30% of GenAI projects ever make it to production. This is not innovation. It’s a bottleneck.

For years, enterprises have relied on two limited approaches to tame AI’s unpredictable nature. One approach is to “prompt and pray”. The other is to build static, hard-coded chains around large language models in an attempt to add layers of validation and correction. These methods may improve performance, but they demand a heavy investment of time and resources. Now, the AI stack is evolving into more than just an LLM with some extra constraints.

Why LLMs Alone Fall Short in the Enterprise

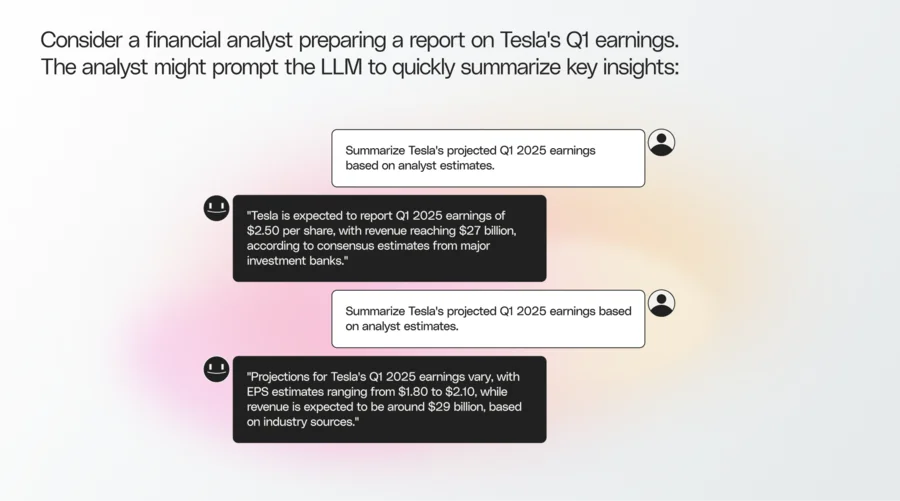

Large language models excel at pattern recognition, reasoning, and synthesis. However, they are unreliable by design because they generate responses probabilistically. This introduces hallucinations, and the same prompt may produce entirely different answers each time. For the enterprise, that is a dealbreaker. A slightly off-brand marketing email is one thing. A financial projection that misses by millions or a misclassified medical condition is a real-world failure.

When the same prompt yields different answers, which response is correct? The inconsistencies go beyond different numbers. These errors compound when an LLM handles complex, multi-step tasks, and the hallucinations may multiply through layers of decision-making, leading to outcomes that no enterprise can risk.
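To make the compounding concrete, here is a back-of-the-envelope sketch. It assumes, purely for illustration, that every step in a workflow is correct with the same probability and that steps fail independently; the 95% figure is a made-up example, not a measured accuracy for any model.

```python
def chain_success_probability(step_accuracy: float, steps: int) -> float:
    """Chance that every step in a multi-step chain is correct.

    Illustrative assumption: each step is independently correct with
    probability `step_accuracy`, so the chain succeeds with p ** n.
    """
    if not 0.0 <= step_accuracy <= 1.0:
        raise ValueError("step_accuracy must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return step_accuracy ** steps


# Hypothetical per-step accuracy of 95%.
for steps in (1, 5, 10, 20):
    print(f"{steps:>2} steps: {chain_success_probability(0.95, steps):.1%}")
```

Under these assumptions, a step that is right 95% of the time yields a fully correct ten-step chain only about 60% of the time, and a twenty-step chain only about 36% of the time.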

Enterprises have long attempted to stabilize AI outputs with fine-tuning, various RAG techniques, and prompt engineering tricks. Yet even these methods do not eliminate the core issue: LLMs on their own cannot guarantee a desired output, making them untrustworthy for tackling critical use cases. As Ori Goshen, Co-CEO of AI21 Labs, puts it:

“Neither DeepSeek’s R1 nor other leading models address the core challenges of controlling output precision and optimizing resource efficiency. Both struggle in nontrivial workflows. There are no shortcuts. AI systems—not just LLMs—are needed to tackle real-world enterprise use cases.”

The higher the stakes, the greater the need for trust, control, and traceability.

The Shift – From LLMs to AI Systems

Scaling models is only part of the equation for future AI success. The true challenge is building comprehensive systems that weave AI into structured decision-making. An enterprise AI system should not be limited to answering questions. Dynamic orchestration and planning are needed to decide when to retrieve factual data, when to offload calculations and tasks to specialized tools, and when to rely on human oversight. Whether developers validate outputs by using “prompt and pray” or by manually building static chains, the goal remains the same: overcoming the inherent limitations of LLMs.

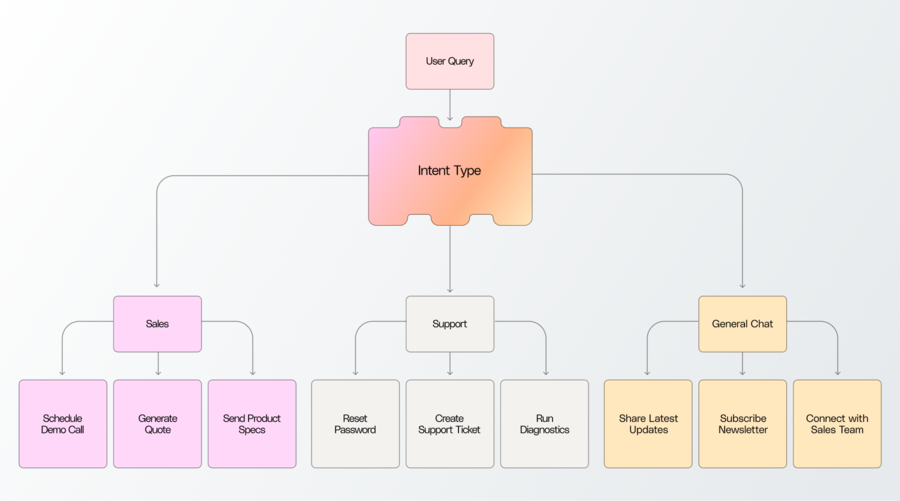

Let’s see how an AI system would work for a SaaS company. An LLM on its own might answer a customer’s pricing query based on past interactions. A fully orchestrated AI system goes further: it identifies intent, retrieves real-time product updates, integrates with CRM data, and routes complex requests to the right team, so that responses are accurate and actionable.
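The SaaS flow described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not a description of any vendor's product: the `PRICING` and `CRM` dictionaries stand in for real services, `classify_intent` is a hypothetical keyword matcher where a real system might use an LLM or a trained classifier, and every name here is invented for the example.

```python
from dataclasses import dataclass, field

# Hypothetical stand-ins for a live pricing service and a CRM.
PRICING = {"pro": "$49 per seat per month"}
CRM = {"acme": {"plan": "pro"}}


@dataclass
class Result:
    answer: str
    route: str
    trace: list[str] = field(default_factory=list)  # audit trail of decisions


def classify_intent(query: str) -> str:
    """Toy keyword classifier (assumption: a real system would be smarter)."""
    text = query.lower()
    if any(word in text for word in ("price", "pricing", "cost")):
        return "pricing"
    if any(word in text for word in ("cancel", "refund")):
        return "billing_escalation"
    return "general"


def handle_query(customer_id: str, query: str) -> Result:
    """Decide whether to answer from structured data or route to a team."""
    trace = []
    intent = classify_intent(query)
    trace.append(f"intent={intent}")

    account = CRM.get(customer_id)
    trace.append(f"crm_hit={account is not None}")

    if intent == "pricing" and account is not None:
        price = PRICING.get(account["plan"])
        if price is not None:
            # Answer comes from structured data, not from model memory.
            trace.append(f"pricing_lookup={account['plan']}")
            answer = f"Your {account['plan']} plan costs {price}."
            return Result(answer, "self_service", trace)

    if intent == "billing_escalation":
        trace.append("routed_to=billing_team")
        return Result("Connecting you with our billing team.", "billing_team", trace)

    # Anything the system cannot answer confidently goes to a human.
    trace.append("routed_to=support_team")
    return Result("A support specialist will follow up.", "support_team", trace)


result = handle_query("acme", "What is the pricing for my plan?")
print(result.answer)
print(result.trace)
```

The point of the sketch is the shape rather than the details: facts come from lookups, the language model (if any) is one component among several, and each decision is recorded so it can be reviewed later.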

For businesses to get real value, the AI system needs to operate within defined parameters, balancing automation with precision, optimizing for efficiency without runaway expenses, and ensuring responses meet business needs without unnecessary overhead.

This shift is already underway, and the enterprises that adopt early will be the ones to realize ROI on AI and lead the market.

Cut the Hype, Ask the Right Questions

Many are branding this transition as the rise of “AI agents.” But not all AI agents are created equal.

Plenty of today’s “agent” solutions are little more than LLM-based chatbots with a fancy UX. These models may take actions, retrieve documents, or call APIs, but they remain unpredictable.

In 2025, enterprises need to cut through the hype and ask the right questions:

- Control – How do I ensure the output aligns with my needs?

- Reliability – Does it consistently produce correct outputs?

- Integration – Can it connect to structured data, APIs, tools, and enterprise systems?

- Traceability – Can I audit how the AI reached its decision?
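As a rough illustration of what the control, reliability, and traceability questions probe, the sketch below wraps any text generator in a validation gate and records every attempt. It is a minimal example under stated assumptions, not a recommended architecture: `guarded_generate` and its parameters are hypothetical names, and the generator is a stub rather than a real model call.

```python
from typing import Callable, Optional


def guarded_generate(
    generate: Callable[[str], str],
    prompt: str,
    allowed: set[str],
    max_attempts: int = 3,
) -> tuple[Optional[str], list[dict]]:
    """Call `generate` until its output is in `allowed`, logging each attempt.

    Returns (accepted_output_or_None, audit_log). None means no attempt
    passed validation, so the caller should escalate to a human.
    """
    audit = []
    for attempt in range(1, max_attempts + 1):
        output = generate(prompt).strip().lower()
        accepted = output in allowed
        audit.append({"attempt": attempt, "output": output, "accepted": accepted})
        if accepted:
            return output, audit
    return None, audit


# Stub generator that simulates an inconsistent model: one off-spec
# answer followed by a valid one.
canned = iter(["Maybe?", "APPROVE"])
decision, log = guarded_generate(
    lambda prompt: next(canned),
    "Approve or reject this expense claim.",
    allowed={"approve", "reject"},
)
print(decision)
for entry in log:
    print(entry)
```

Here the allowed set constrains what can leave the system, the retry loop and escalation path address consistency, and the audit log gives a reviewer a record of how the final answer was reached.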

For years, enterprises have treated AI as a playground: running pilots, testing use cases, and experimenting with models. Now, they’re ready for the next step: dynamic, controllable, integrated systems that deliver on AI’s promise.

We’ve been building AI for real-world enterprise needs for the past eight years. We focus on systems, not just models — because that’s what businesses actually need to move from experimentation to execution.