
Build with Jamba-Instruct on Snowflake Cortex

As part of its ongoing partnership with Snowflake, AI21 is thrilled to announce the upcoming integration of its state-of-the-art large language models with the Snowflake Data Cloud, starting with its latest and most advanced Foundation Model, Jamba-Instruct.

The model will soon be available in Snowflake Cortex, a fully-managed service that enables customers to analyze text data and build AI applications using a range of industry-leading LLMs, AI models, and vector search capabilities.

The partnership between AI21 and Snowflake Cortex democratizes access to cutting-edge technology, placing powerful GenAI use cases securely within reach for all Snowflake customers. As Baris Gultekin, Head of AI at Snowflake, shares: “With AI21’s groundbreaking Jamba-Instruct offered in Cortex AI, our customers will now be able to seamlessly connect their data to build transformative GenAI applications with AI21’s powerful models.”

Snowflake Cortex

AI21 is pleased to partner with Snowflake Cortex because of its commitment to streamlining the path to GenAI application development and deployment for all Snowflake customers, whether they are advanced developers working in Python or analysts using SQL.

As a fully-managed service built into the Snowflake Data Cloud, Snowflake Cortex supports its customers with:

- Seamless deployment of LLMs: Snowflake Cortex is natively integrated with the Snowflake Data Cloud, providing a single platform to simplify the connection between your organizational data and GenAI models.

- Secure data access: As Snowflake Cortex deploys within the secure perimeter of the Data Cloud, your data remains fully within Snowflake, and the LLM you deploy with Cortex benefits from Snowflake’s existing security, scalability, and governance capabilities.

- Infrastructure management: Snowflake Cortex optimizes the complex GPU infrastructure needed to run and scale GenAI solutions.

This makes using Jamba-Instruct as simple as writing a basic LLM function directly within Snowflake Cortex’s environment. As a result, customers can confidently use our models to focus on what matters most to them: building and innovating.
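As a rough illustration of what such a function call could look like, here is a minimal sketch that builds a SQL statement around Snowflake Cortex's `SNOWFLAKE.CORTEX.COMPLETE` function. The model identifier `jamba-instruct` and the helper name `build_complete_query` are assumptions made for this example; check the Snowflake Cortex documentation for the exact model name once the integration is live.

```python
def build_complete_query(prompt: str, model: str = "jamba-instruct") -> str:
    """Build a SQL statement that calls SNOWFLAKE.CORTEX.COMPLETE.

    The default model name is an assumption for illustration only.
    """

    def quote(value: str) -> str:
        # Escape backslashes and single quotes for a SQL string literal.
        escaped = value.replace("\\", "\\\\").replace("'", "''")
        return "'" + escaped + "'"

    return (
        f"SELECT SNOWFLAKE.CORTEX.COMPLETE({quote(model)}, {quote(prompt)}) "
        "AS response;"
    )


if __name__ == "__main__":
    # Print the statement; it can then be run in a Snowflake worksheet
    # or through any Snowflake client.
    print(build_complete_query("Summarize this call transcript in three bullets."))
```

The sketch only constructs the query text, so it runs without a Snowflake connection. In a real application, bind parameters are generally preferable to string interpolation.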

Building with Jamba-Instruct on Snowflake Cortex

AI21’s debut model on Snowflake Cortex, Jamba-Instruct, is a Foundation Model built on a groundbreaking hybrid architecture. As the world’s first production-grade model to combine the conventional Transformer architecture with a novel structured state space model (SSM) architecture, it harnesses the advantages of both to achieve best-in-class performance and quality.

Jamba-Instruct stands out in several significant areas:

Longest context window in its size class

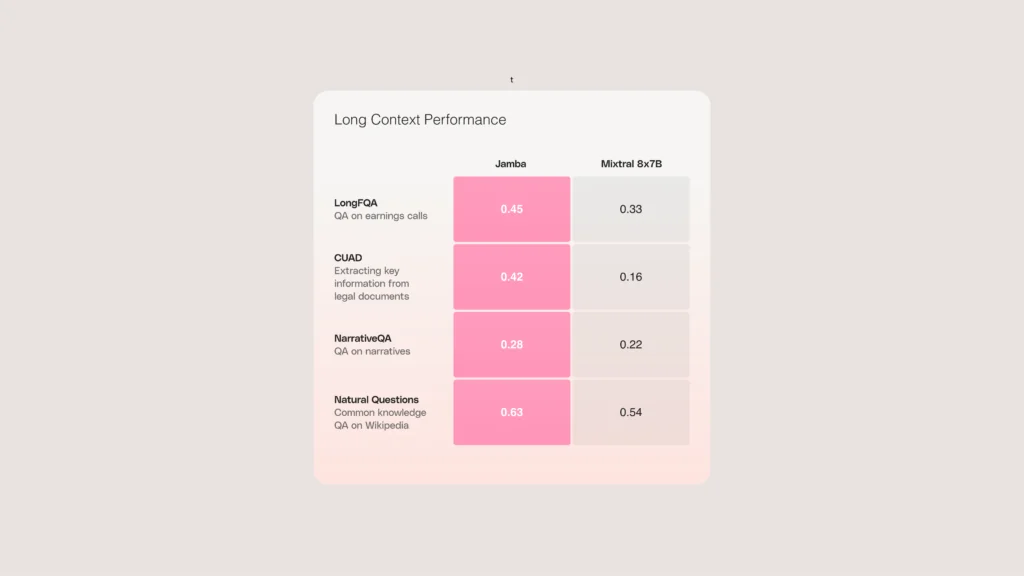

With a 256K context window, Jamba-Instruct effectively handles the kind of long-context use cases that are so valuable to enterprises, outperforming competitor Mixtral 8x7B across long-context benchmarks. Roughly equivalent to an 800-page novel, a 256K context window opens up new opportunities to build powerful GenAI workflows at unprecedented scales.
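To make the "800-page novel" comparison concrete, here is a back-of-the-envelope sketch. The conversion factors (about 0.75 English words per token and about 240 words per page) are rule-of-thumb assumptions introduced for this example, not figures from AI21; actual ratios vary with the tokenizer and the page layout.

```python
def estimated_pages(
    context_tokens: int,
    words_per_token: float = 0.75,  # assumed rule of thumb for English text
    words_per_page: int = 240,      # assumed typical novel page
) -> float:
    """Estimate how many printed pages fit in a given context window."""
    return context_tokens * words_per_token / words_per_page


if __name__ == "__main__":
    pages = estimated_pages(256_000)
    print(f"A 256K-token window holds roughly {pages:.0f} pages of text.")
```

Under these assumptions, 256,000 tokens correspond to about 192,000 words, or roughly 800 pages.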

For example, a financial institution can enable automated term sheet generation across many call transcripts, thereby increasing productivity, reducing human error, and absorbing draining, repetitive tasks so employees can focus on more strategic projects. Jamba-Instruct can also be used to implement a customer-facing chatbot that, due to its long context window, can maintain extended conversations that remain coherent over time.

Behind the scenes, the model can also support a robust and sophisticated RAG Engine mechanism, able to draw on and process more of the company’s organizational knowledge base at once so that answers are grounded and accurate. These are just a few examples of how Jamba-Instruct’s context window length helps enterprises build strategic use cases and innovative GenAI applications.

Cost efficiency

Offered at a highly competitive price point and achieving impressive quality benchmarks across the board, Jamba-Instruct is the clear model of choice for companies looking to build reliable GenAI applications at scale without ballooning costs.

By bringing Jamba-Instruct to Snowflake Cortex, AI21 is excited to make this cutting-edge and cost-efficient technology highly accessible to a wide range of innovators—no AI or ML engineering required. In partnering with Snowflake Cortex, we at AI21 further our commitments to widening access to the LLMs needed to power high-value GenAI enterprise use cases and removing common barriers to production at scale by bringing our technology to Snowflake’s community of analysts and developers.

To learn more and be the first to access Jamba-Instruct on Snowflake Cortex, please contact us.