6 Reasons Why Enterprises Struggle with AI Integration

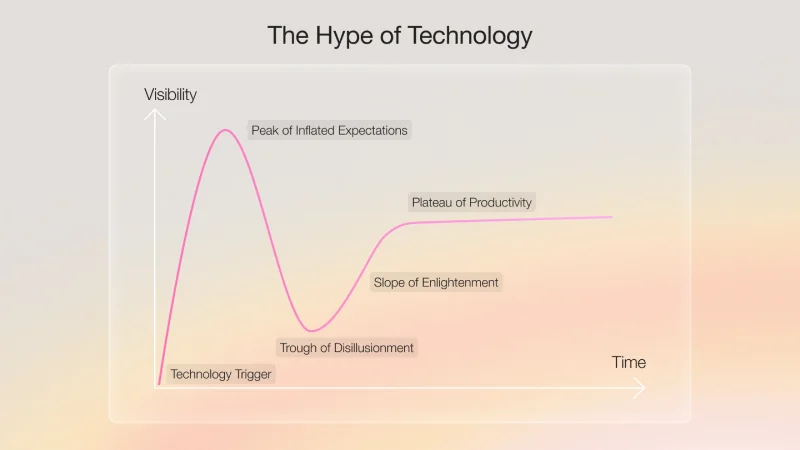

Nearly two years have passed since ChatGPT was released, showcasing the amazing capabilities of large language models (LLMs). Soon after, GitHub Copilot came out and quickly became essential for modern programmers. Every day, we hear about companies developing AI and launching countless beta versions.

However, ChatGPT and Copilot (and their competitors) are mainly used by tech-savvy users who can monitor the AI-generated outputs. While we occasionally hear about internal use cases in organizations with minimal potential damage, my aunt, as an end user, is still waiting for more practical features. She wants automatic WhatsApp or Facebook feed summaries, Gmail’s automated email writing, Spotify to offer podcast highlights before listening, or Netflix to ask a few questions about your preferences for recommendations.

These are all use cases anyone could think of, even without AI expertise, and they all share the fact that they supposedly don’t need advanced techniques like finetuning, Retrieval-Augmented Generation (RAG), or agents — just well-crafted prompts. A proof of concept (PoC) for them could be built in ten minutes, but almost two years have passed, and none of these features exist. An article by the Washington Post even claims that the hype bubble is deflating.

So, what prevents AI from being integrated into every software product we use today?

It’s Not About Latency & Cost: Contrary to popular belief, LLMs are quite affordable. For example, generating the whole Harry Potter series, which took J.K. Rowling, 17 years, would take GPT-4 less than a few hours and cost only about $15. In a business context, a single product description for an ecommerce shop, which typically takes a few hours and costs a company $10-20, would take a few seconds and cost just one cent.

It’s Not About Hallucinations: While hallucinations were initially the main problem with LLMs, advancements like retrieval-augmented generation (RAG) have greatly reduced their frequency Additionally, modern LLMs often indicate when they lack sufficient information, making outright hallucinations rare. With mechanisms like guardrails to cross-check content and the immense value these models provide, the remaining risk of hallucinations is manageable. By clearly communicating potential inaccuracies to users, we can responsibly leverage AI’s benefits while keeping users informed and vigilant.

What are the barriers?

As the Head of Solutions at one of the world’s leading LLM companies, I’ve had the opportunity to work with many customers over the past two years and learn about the gaps that lead to the slow adoption of this technology. Here is what I learned:

1. Knowledge Gap

People often overestimate AI’s capabilities. For instance, GPT-4, despite its sophistication, can’t even count the ‘R’s in the word “strawberry”. The ChatGPT product, with its added features like internet search and image analysis, creates the illusion that all LLMs can perform complex, independent tasks. I worked with one customer, who implemented a model and expected it to cross-check input with general knowledge, images and internet data, warning the user of any potential discrepancies. However, without explicit instructions and a proper application layer with function calling support, the LLM by itself can’t do this. Even tech-savvy users who have already experimented with LLMs, still believe they need to finetune the model with their own data to make it work on their use case. However, model finetuning is usually resource-intensive and unnecessary. Instead, prompt engineering is a more efficient way to tailor the model’s responses without altering it.

2. Lack of product creativity

Despite executives viewing AI as a game-changer, their teams often remain stuck in conventional thinking, unable to fully harness its potential. Product managers often stick to the chat interface, largely due to the success of ChatGPT, but not every product needs to rely on chat. Consider Microsoft Excel: its intuitive GUI allows users to perform complex tasks with simple clicks and cell selections. Replacing this with a chat interface, where you’d have to describe each cell in words, would turn a straightforward task into a frustrating guessing game of battleship. For products with well-defined functions, chat can be more of a hindrance than a help. This is why, even with ChatGPT’s versatility, users still love AI tools with user-friendly interfaces like Wordtune.

3. Data Privacy Concerns

LLMs rely on data from prompts to function, but strict privacy regulations like GDPR and HIPAA prevent companies from sharing sensitive information with third parties. While on-premises solutions address this for companies with high volume and sufficient compute resources and hardware, smaller companies might consider private deployments to their VPC as a more cost and resource efficient option. While cloud-based tools like Amazon Bedrock keep data within the customer’s environment, they aren’t fully mature, especially for complex tasks like RAG. As a result, companies face delays, either waiting for these tools to evolve or navigating lengthy approval processes for third-party vendors.

4. Technological Gap

While hallucinations are mostly addressed, LLMs still face issues due to their non-deterministic nature—it’s challenging to manage a product that gives slightly different answers each time. For instance, asking the model for JSON can result in varying output formats, that makes the response parsing almost impossible. Explainability is also key, as it’s not always clear why the model chose one response over another, which slows down development. Additionally, assigning complex tasks to LLMs is difficult, especially when accuracy is critical. For example, if I ask the model to generate an SQL query, a minor error like using “>” instead of “>=” could lead to a wrong answer. Imagine asking “How many movies has Disney released since 2020?” and getting 117 instead of the correct 139 because of this mistake. Without seeing the generated query, users can’t verify the answer, which limits the solution’s reliability.

5. Evaluation Challenge

Evaluating text is a hard task. Despite countless automatic benchmarks and daily releases of new models that outperform their competitors on these benchmarks, when it comes to evaluating if a model is good for my use case, there’s no substitute for manually sampling a large and representative set of questions and reviewing the answers one by one to see if the outputs meet the desired standards. This is usually where customers struggle significantly. In classic machine learning, whether it’s classification or regression, there are relatively simple automated methods to check the model’s accuracy. However, with LLMs there are no magic solutions. One must work hard until convinced that the solution is good enough.

6. Expectations and Timelines

One of the biggest challenges in AI integration is the gap between expectations and reality, particularly concerning timelines. With LLMs, creating a PoC can take just five minutes, leading customers to expect a fully functional product within a few weeks. However, moving a PoC to a production-grade solution requires comprehensive planning, development, and testing. It involves not just setting up the LLM with a prompt and serving it to the end user – just like any other application, building an AI application requires careful definition of the input and the output and the relationship between them, defining metrics and collecting data, breaking the task into smaller subtasks which will be easier for the model, implementing every component and integrating them with each other and with other systems and tools, ensuring the solution meets specific use case requirements, and thoroughly testing it to handle various scenarios accurately and consistently. Each step is crucial to ensure the solution is reliable, secure, and delivers consistent value – but the discrepancy between the rapid PoC and the lengthy development process has a negative impact on the customers’ trust in this technology.

Getting back to the starting point—two years have passed since this technology became available and well-known, yet my aunt is still waiting for her features. Investors are likely to be disappointed, expecting returns on their investments by now. However, no new technology has become dominant within two years—not the internet, social media, nor the cloud. So, while this might seem like a bubble that’s about to burst, the truth is that even if it does, the technology is here to stay, much like the dot-com bubble. It’s here because it already brings real value—after all, it helped me write this article.

I’m not just here to state the problems. Look for the solutions to these challenges in our upcoming ebook.

Stay tuned on LinkedIn, Twitter, Facebook, or sign up for our newsletter.