Table of Contents

What is a Transformer Model? Components, Innovations & Use Cases

Businesses struggled for years with AI systems that failed to grasp conversational context, resulting in frustrated customers and severely limited automation possibilities.

Traditional AI models processed text word by word, creating a critical bottleneck that prevented natural language understanding (NLU). Transformer models solved this fundamental problem by analyzing entire text sequences simultaneously, fundamentally changing how AI understands human language.

This article explores transformer models in depth, covering their definition, capabilities, core components, recent innovations, and practical applications in transforming industries from customer service to healthcare.

What is a transformer model?

A transformer model is a type of artificial intelligence (AI) system designed to work with language. It can analyze vast amounts of written material and learn patterns, like which words tend to appear together or how the structure of a sentence works. This allows it to produce text that often sounds natural and relevant.

Earlier AI systems like recurrent neural networks (RNN) could process text but struggled with computational efficiency when handling longer sequences. They couldn’t easily connect information across distant parts of a text, which limited their effectiveness in tasks where understanding context is crucial.

Modern systems called large language models (LLMs) are built using transformer technology. These models have significantly improved AI’s ability to generate realistic and helpful text.

Why are transformers important?

Transformers excel at processing text while maintaining context within their context window, unlike many other AI models that process words sequentially and struggle with longer sequences.

This advancement enables more sophisticated AI applications across multiple domains:

- In enterprise search, traditional systems might process a query like “Can you pick up an order for someone else?” by focusing only on the keywords “order” and “someone else.” Transformer-powered search understands the entire query as being about third-party pickup policies.

- When properly implemented with context management techniques, transformer-based AI assistants can provide more coherent responses throughout a conversation, even as topics evolve or change.

- In language translation, transformers consider the surrounding context to choose the right meaning for ambiguous words. For example, they can distinguish when the French “avocat” means “lawyer” versus “avocado” based on the surrounding text.

- For medical and legal document processing, transformers’ ability to handle longer texts helps ensure critical details, such as patient histories, medications, and diagnoses, are captured while analyzing and summarizing complex documents.

What can transformer models do?

Transformers efficiently process text and structured data sequences, maintaining context better than many traditional models. Their versatility makes them valuable across multiple industries:

- In legal and financial services, transformers analyze complex contracts and assist with fraud detection by processing transaction data alongside traditional statistical methods.

- For customer service, they power chatbots that can understand and respond in multiple languages, making conversations feel more natural.

- In healthcare, they help doctors by organizing patient information from medical records and can assist with analyzing medical images to help detect diseases.

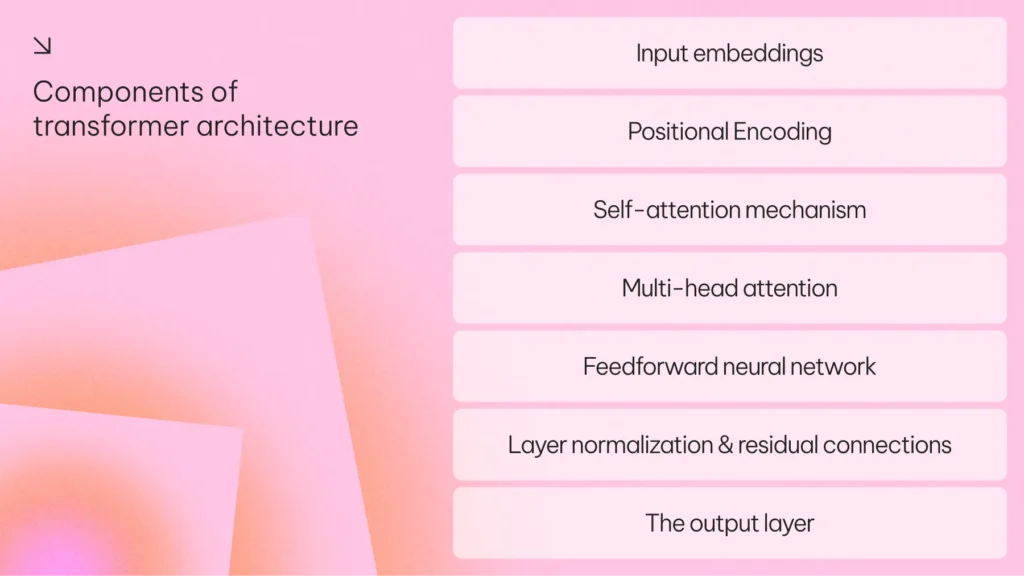

Components of transformer architecture

A transformer model consists of several key components that allow it to process and generate text efficiently.

Input embeddings

Input embeddings enable AI to analyze and generate human-like text, words, and phrases by converting them into numerical form so mathematical models can process them.

Instead of treating words as isolated symbols, input embeddings map words with similar numerical values. For example, in contract analysis, words like “agreement” and “contract”, allow AI to recognize their connection.

Positional Encoding

Positional encoding assigns numerical values to each word’s position in a sequence, helping the model recognize order and structure.

This is critical for areas like financial transaction monitoring, where the sequence of events could indicate suspicious activity, such as a sudden large withdrawal followed by multiple international transfers.

Self-attention mechanism

Self-attention allows the transformer to weigh the importance of different words within the same sentence.

For example, In customer service automation, self-attention helps AI-powered chatbots understand the true intent behind customer queries. If a customer types, “My server is down, and I need urgent assistance,” the transformer identifies “server” and “urgent assistance” as key elements and routes the request to a high-priority technical team.

Learn more about attention mechanisms in language models.

Multi-head attention

Multi-head attention allows the model to examine multiple input aspects simultaneously, improving accuracy and relevance.

For instance, in an HR database, if a user searches for “intellectual property agreements we hold at ACME Inc.,” different attention heads might focus on “intellectual property,” “agreements,” and “ACME Inc. agreements” separately. This ensures that the search results include everything relevant.

Feedforward neural network

The feedforward network applies mathematical transformations to information from the self-attention mechanism and uses it to make better, more relevant predictions.

For example, in e-commerce personalization, a transformer model might recognize that a user frequently searches for fitness-related products. The feedforward network then processes this data, learning that users with similar behavior tend to purchase running shoes next. Based on this pattern, the AI recommends running shoes as the next likely purchase.

Layer normalization & residual connections

Layer normalization prevents extreme values from disrupting learning and keeps the model stable, while residual connections help the model retain critical information.

Both techniques are essential in applications like loan approvals. A transformer analyzing a loan application must consider historical financial data, credit scores, and market trends. Layer normalization ensures the model processes this data consistently, while residual connections help retain critical information, preventing errors in assessing risk.

The output layer

The output layer finalizes the model’s predictions and generates the final response. For example, after processing documents, market insights, and risk factors, the output layer can deliver a concise, data-driven report to executives, helping them make informed decisions quickly.

Key transformer innovations and variants models

First introduced in the 2017 paper “Attention is All You Need” by Vaswani et al., transformer models have reshaped natural language processing (NLP) by enabling AI systems to better understand and generate human language at scale. Since then, a number of influential transformer variants have emerged, each driving key advancements across the field.

BERT (Bidirectional Encoder Representations from Transformers)

Google integrated BERT into its search engine in 2019 to better interpret natural language queries. BERT helps AI understand the meaning of words based on their context in a sentence, improving the relevance of search results, especially for conversational or ambiguous queries.

GPT (Generative Pre-trained Transformer)

The GPT series—particularly GPT-3—can generate highly human-like text by predicting and producing language one token at a time based on context. These models power various AI applications, including chatbots, virtual assistants, and content-generation tools.

T5 (Text-to-Text Transfer Transformer)

T5 frames every NLP task, such as translation, summarization, or question answering, as a text-to-text problem, where both input and output are plain text. This unified approach makes it highly flexible and effective across a wide-range of AI applications.

How transformers differ from other neural networks

Transformers represent a significant shift from earlier neural network architectures like RNNs (Recurrent Neural Networks) and CNNs (Convolutional Neural Networks).

RNNs process input sequentially, making them suitable for early speech recognition and natural language processing tasks. However, they struggle with retaining long-range context and are difficult to scale efficiently. CNNs are primarily used in computer vision and power tasks like facial recognition for smartphone authentication.

Unlike RNNs and CNNs, transformers analyze entire input sequences at once using self-attention, which allows them to capture complex relationships in data more effectively. This makes them especially well-suited for enterprise applications such as contract analysis, AI chatbots, and multilingual translation. That said, their large size and resource requirements can pose scalability challenges in production environments.

Transformer model use cases

Transformers are transforming multiple industries by enabling AI models to process large volumes of text, images, and structured data with greater accuracy and efficiency. Their ability to handle long-range dependencies and generate human-like responses has made them essential for automation, decision-making, and predictive analytics.

- Finance: In finance, transformers are widely used for fraud detection and risk assessment. JPMorgan Chase’s own LLM Suite integrates OpenAI’s ChatGPT, which is built on transformer models and uses a self-attention mechanism to help prevent fraud before it happens.

- Retail: Amazon leverages transformer models to power its recommendation engine and product search functionality. The models analyze customer browsing history, purchase patterns, and product relationships to deliver highly personalized shopping experiences.

- Healthcare: In 2023, Google DeepMind introduced MedPaLM 2, a transformer-based large language model fine-tuned on medical datasets. It achieved expert-level performance on U.S. medical licensing exam questions and demonstrated the ability to provide helpful and accurate answers to medical queries. The model is being explored for use in clinical decision support, patient triage, and summarizing complex medical documents to assist healthcare professionals.

Limitations and challenges of transformers

Transformers can be demanding in terms of computing power, especially when working with long pieces of text or data. They require a lot of memory and processing time, which can drive up infrastructure costs for organizations trying to scale AI solutions. As a result, they may not be ideal for real-time, high-speed applications like customer service chatbots, medical diagnostics, or financial risk assessments.

One of the main reasons for this is how transformers analyze input. Instead of reading information step by step, they compare every part of the input with every other part to understand context. This process becomes much slower and more resource-intensive as the input grows, creating delays in time-sensitive situations.

To help overcome these challenges, newer versions of transformer models are being developed that aim to be more efficient, especially when handling large or complex inputs.

Future directions for transformer models

As AI adoption continues to grow, enterprises need scalable and cost-effective solutions. To overcome these limitations, researchers are developing more efficient transformer architectures that reduce computational demands while enhancing performance. Key innovations include:

- Mixture of Experts (MoE): A modular approach where only a subset of the model’s components are activated during each task. This reduces unnecessary computation and lowers infrastructure costs without compromising accuracy.

- Sparse Attention Mechanisms: These limit the amount of data the model processes at once by selectively attending to the most relevant information, improving speed, and reducing memory usage.

- Retrieval-Augmented Generation (RAG): Combines transformers with external knowledge sources, allowing models to access up-to-date information without needing to store everything internally, improving accuracy while keeping model sizes manageable.

These advancements may enable businesses to scale AI deployments more effectively while improving efficiency, accuracy, and adaptability.